Vitalis 2015 was fundamentally different from previous years. I don't mean the lectures so much; all too often they were still full of bullet points. On the other hand, a surprising number of speakers skipped PowerPoint entirely, used Prezi, or gave streamlined presentations. We only saw a few presentations, because there was so much of interest on the exhibition floor, and that really is something new. In the past the exhibition was extremely predictable and boring: just big shared systems that all promised the same things and delivered rather little.

Category Archives: iotaMed

We are heading in the wrong direction

Two ways

Roughly speaking, there are two ways to improve healthcare. One is to make sure we make better diagnoses and provide better treatments. The other is to optimize the care process itself and its resources.

The process

To optimize process and resources, you need insight into what goes on in day-to-day work. You need metrics and overviews. With these you can find bottlenecks and inefficient subprocesses. You can also verify that process changes actually lead to savings and/or improvements in the quality delivered. Without that insight into the process, you are working blind.

In Swedish healthcare, the foremost measuring instrument is the information in the medical record. Properly designed, it can give insight into patients' needs at every level, from the individual up to larger population groups. Needs can be analyzed over time and geography, and resources can be directed to where they are needed most. The better structured the record information is, the more detailed and useful it becomes.

If the information is sufficiently reliable and complete, we can also detect where in the process things go wrong. We can then deploy extra resources or training to bring the weaker subprocesses up to the level of the better ones.

What this requires, then, is record information that is structured and standardized enough that we can make automatic comparisons between patients, care providers, and reasons for care across time and place. Not only must the systems we use be built on logically strict information structures; we must also make sure that users supply these systems with complete and correct data. Anything that stands in the way of complete and reliable data collection must be addressed, since this is crucial for achieving good process control and good results.

Is it true?

Or… is it really true? Can we really improve the care process if we have better structured data and can get users to supply us with complete information? Well, we probably can, but by how much and at what price?

Does anyone know how much we can improve healthcare by measuring and adjusting the process and the resources? By how many percent?

Has anyone thought about the cost? Sure, we know what the systems cost, but what does it cost the care staff to feed the systems with data? What has to be sacrificed to leave enough time and energy for data entry? Which other operational systems have been sacrificed to build and buy systems that provide insight into the process? What could we have done instead?

The real problem

The other way to improve healthcare is to improve diagnosis and treatment themselves. When you read records and patients' accounts of their path through the healthcare system, you are struck by how often the process went wrong because a detail was missed. When a diagnosis has been missed or established much later than necessary, the reason is almost never plain ignorance on the doctor's part, but a lack of consistency and attention to detail. A diagnosis was settled on too quickly without ruling out all other relevant alternatives, and the wrong path was followed far too long. Often a treatment was used that is no longer considered optimal, or one that conflicts with another diagnosis or treatment the doctor was not aware of.

The errors you see in the records are almost always of this kind. They are the same kind of errors pilots made before checklists were introduced. Nothing in a pilot's checklist is new to the pilot. He does not learn to fly from a checklist, but without it he has a significant chance of forgetting one of the steps anyway. Exactly the same thing happens in far too many cases in healthcare.

Catching this problem requires entirely different tools from the structured record systems needed for process follow-up. It may be tempting to think that a well-structured record system can automatically help with analysis and suggest diagnoses and treatments, but the structure this requires is completely different from the one required for process control.

In today's systems, the specialization of record systems for process control has gone so far that it crowds out their ability to support diagnosis and treatment. I would venture to claim that the net positive effect of the better process analysis and control we get from today's systems is outweighed by the negative effect on diagnosis and treatment. Not because current systems directly make diagnosis and treatment worse, but because the missing support, combined with the rapidly increasing complexity of diagnoses and treatments, means that we are falling further and further behind, relatively speaking. What we need is rapidly growing support for diagnostics and treatment; what we get is more and more data structures that serve only process follow-up.

One could argue that process control is the administrators' business, while diagnostics and treatment belong to doctors and nurses, so we will have to take care of that ourselves. But only the administrators have the resources and the power to define the systems and buy them. With that power comes the duty to make sure the most important needs are met, and the most important need is not process control. It is handling increasingly complex diagnostics and treatment. The potential gain in money, efficiency, and human well-being is many times greater from investing in diagnostics and treatment than the relatively marginal improvements we can expect from further sharpening of processes.

Think again

So, concretely, how do we do this? What is the best way to support better diagnostics and treatment?

The first big step is to shift the focus from “what we have done to the patient” to “what we intend to do with the patient” and “what problem we are dealing with”. It is frightening that almost no existing system in Sweden today even has the concept of a “problem” or an “issue”. It is as if the very reason for the care is a side matter, and it is, if you concentrate entirely on the process. Try for yourself to find all the notes, referrals, or prescriptions in a record that relate to a particular disease. Medically speaking, that should be the first thing you can do.

Take any current system and try to get a picture of what has been done to diagnose a disease, what should have been done but has not been done yet, and, not least, why certain seemingly obvious things were not done.

Try to find out where a referral for, say, a cardiac ultrasound on a 12-year-old should be sent. Cardiology? Pediatric cardiology? Pediatrics? Radiology? Clinical physiology? When the referral inevitably comes back to you, can you resend it to the right person, or do you have to rewrite it from scratch? When you get a reply, can you ask for additional information in a conversation, or do you have to write a new referral for every little question, or resort to the telephone?

Issues

If care documentation is instead centered around an “issue”, e.g. “heart murmur”, that issue can contain links to the referral destinations that apply to that particular problem. I would not have to guess when and where to refer. (It would also help, of course, if referrals allowed a conversation.)

These issue definitions (issue templates) should not be part of the software; they should originate in clinical practice itself. In other words, once I have decided to handle heart murmurs in a certain way, I should be able to define that in an issue template so that it can later remind me of all the details and thereby make my work more consistent and complete. If I change my approach later, I adapt my templates myself. It also gives me the freedom to use templates from my colleagues and to share mine with them.

It is also important that clinical data entered in one template is reused in every other template for the same patient that needs the same data. That streamlines the work.
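To make the issue-template idea concrete, here is a minimal Python sketch, assuming a patient record where each clinical data item is stored once and every active template reads from that shared store. The class names `IssueTemplate` and `PatientRecord`, the data item names, and the referral target are hypothetical illustrations, not iotaMed's actual data model.

```python
from dataclasses import dataclass, field


@dataclass
class IssueTemplate:
    """Hypothetical clinician-defined issue template."""
    name: str
    required_data: list[str]  # clinical data items this issue needs
    referral_targets: list[str] = field(default_factory=list)  # where to refer for this issue


@dataclass
class PatientRecord:
    """Clinical data stored once per patient and shared by all active issues."""
    issues: list[IssueTemplate] = field(default_factory=list)
    clinical_data: dict[str, str] = field(default_factory=dict)

    def enter(self, key: str, value: str) -> None:
        # A value entered while working in any template goes into the shared store.
        self.clinical_data[key] = value

    def missing(self, issue: IssueTemplate) -> list[str]:
        # Items this template still reminds the clinician about.
        return [k for k in issue.required_data if k not in self.clinical_data]


murmur = IssueTemplate("heart murmur", ["blood pressure", "auscultation", "ECG"],
                       referral_targets=["pediatric cardiology"])  # illustrative only
diabetes = IssueTemplate("type 2 diabetes", ["blood pressure", "HbA1c", "weight"])
record = PatientRecord(issues=[murmur, diabetes])

record.enter("blood pressure", "118/76")  # entered once, while working the murmur issue
print(record.missing(murmur))    # ['auscultation', 'ECG']
print(record.missing(diabetes))  # ['HbA1c', 'weight']
```

Because the blood pressure lives in the shared store rather than inside either template, entering it once satisfies both issues, and each template's `missing()` list doubles as the reminder of what is still left to do.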

More consequences

If the doctor can use an issue-based system, a whole series of beneficial consequences follow almost effortlessly:

- Documentation can be generated automatically from the contents of an issue template.

- Multiple issues, as in multimorbid patients, become far more manageable because clinical data is not repeated.

- Clinical data becomes well structured, not from a statistical perspective imposed after the fact but from a far more relevant clinical perspective. This data is not only closer to reality but also better suited for analysis.

- Interactions between different disease states can be detected automatically. This is completely absent from current systems, because they have no concept of “disease”.

- Quality registry reporting and communicable disease reporting can be done fully automatically, without extra input from care staff.

- The patient's contributions to the record, and the patient's access to it, can be structured through separate patient templates for each type of issue. That way the patient sees the same information as the doctor, but in a different selection and an adapted context.
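The registry-reporting and interaction points in the list above can be sketched the same way. This is a minimal Python illustration, assuming that clinical data is already stored once per patient as key/value pairs, that a registry is described by a hypothetical mapping from registry field names to those keys, and that interaction rules are a hypothetical table keyed by combinations of active issues. None of the field names or rules come from a real registry or from iotaMed.

```python
from typing import Optional

# Hypothetical registry definition: registry field name -> shared clinical data key.
DIABETES_REGISTRY_FIELDS = {
    "hba1c": "HbA1c",
    "systolic_bp": "systolic blood pressure",
    "smoker": "smoking status",
}

# Hypothetical interaction rules: a set of co-occurring issues -> a reminder text.
INTERACTION_RULES = {
    frozenset({"type 2 diabetes", "ankle fracture"}): "review fracture follow-up for a diabetic patient",
}


def registry_report(clinical_data: dict[str, str],
                    field_map: dict[str, str]) -> dict[str, Optional[str]]:
    """Fill a registry report purely from data already entered during care.
    Fields with no recorded value come out as None instead of prompting staff."""
    return {reg_field: clinical_data.get(key) for reg_field, key in field_map.items()}


def interaction_warnings(active_issues: set[str],
                         rules: dict[frozenset[str], str]) -> list[str]:
    """Return the reminder for every rule whose issues are all active at once."""
    return [msg for combo, msg in rules.items() if combo <= active_issues]


shared = {"HbA1c": "52", "systolic blood pressure": "138", "weight": "91"}
report = registry_report(shared, DIABETES_REGISTRY_FIELDS)
print(report)  # {'hba1c': '52', 'systolic_bp': '138', 'smoker': None}

warnings = interaction_warnings({"type 2 diabetes", "ankle fracture"}, INTERACTION_RULES)
print(warnings)  # ['review fracture follow-up for a diabetic patient']
```

The point of the design is that neither function asks the user for anything: both only read data that was entered for clinical reasons, which is what makes the reporting a by-product of care rather than an extra task.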

An example

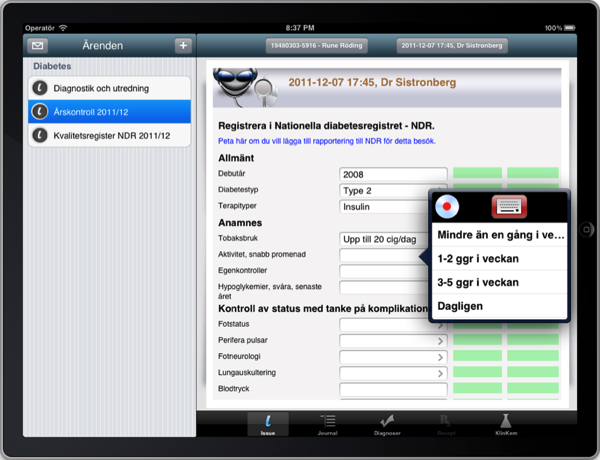

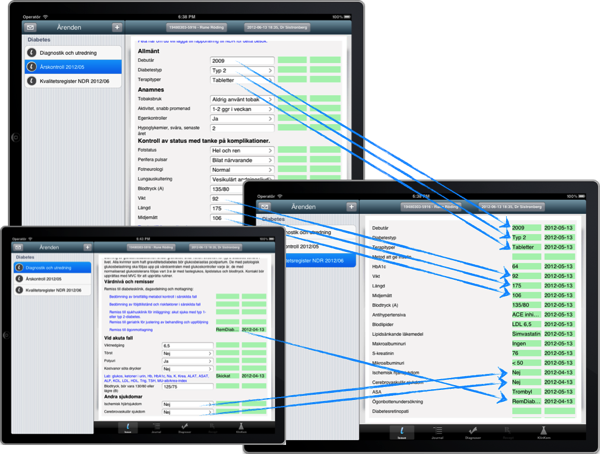

Så hur skulle ett ärendebaserat system se ut? Det bästa (enda?) exemplet är vårt eget iotaMed, ett ärendebaserat system som går på iPads. En typisk skärm ser ut så här:

What we see here is a structure laid out from a clinical perspective. Only data that is meaningful in the direct care of the patient appears. Because the most common data can be entered very quickly, with a double tap, going through a clinical examination is considerably faster than with conventional records.

Because clinical data is shared between issues, filling in quality registries and other reports becomes considerably simpler, even fully automatic.

What does a system like this mean for the doctor? It means she can spend all her time on the patient and on a tool that gives immediate help with the detail work while reminding her of details that are hard to always remember. It reduces the doctor's cognitive load while raising the quality of the work. It lets her use the computer to retrieve scientific information at the right moment and in the right place, and use her brain and the information presented to make better judgments. The computer is unbeatable at details and consistency; the doctor is unbeatable at judgment. That is how the roles should be divided.

Today we often see initiatives where the roles are reversed: the doctor is put in the position of feeding the machine data so that the machine can then draw conclusions. In other words, we let both the doctor and the machine do what each is worst equipped to do, while leaving far too little time for the doctor to do her job.

We often see an overconfidence in the value of patient information for care. Sure, we need to know what has happened to the patient; sure, we doctors are the first to complain when that information is missing; and sure, care can get worse when it is missing. But that does not mean care gets better with more information, and much better with much more information. What we see today, with the overabundance of information in the record systems, is rather that care deteriorates. We can no longer absorb the enormous amount of low-quality information we get about a patient. The mere attempt to use it crowds out more useful work.

Think like this

We must stop recording historical patient information for its own sake. We must stop using care staff as data entry operators. We must shake off the idea that the amount of information is related to quality. And we must shift the emphasis from process measurement to improving diagnostics and treatment.

We must start with tools structured to directly improve diagnostics and treatment, and collect process measurements from that data. Care must come first, process control second. Only in this way can we make full use of doctors and nurses in real patient care while still getting good measurement data for large-scale processes.

Yet another argument for iotaMed

Physicians who have electronic health record systems but dictate patient notes give a lower quality of care than do doctors who use structured documentation, says a study published online May 19 in the Journal of the American Medical Informatics Association.

…

Quality of care was significantly better on three measures for doctors who took structured notes compared with physicians who used the other two styles, the study said.

…

Researchers found that quality of care was significantly worse on three outcome measures for doctors who dictated their notes compared with physicians who used the other two documentation styles.

Vitalis presentation

We recorded our Vitalis presentation on video so that it could be enjoyed (or something) all over the country:

FK and the “functional impairment” text

One of the things that gives us GPs nightmares is Försäkringskassan (FK, the Swedish Social Insurance Agency) rejecting sick-leave certificates. It is a random process in which you, as a doctor, never seem able to get it right. Sometimes it's too short, sometimes too simple, sometimes too subjective, sometimes too complicated. If you write simply, it isn't considered objective; if you write objectively, they don't always understand what you are saying and want you to write more simply. The crux is our description of exactly how the illness prevents the patient, in exactly this occupation, from working. Apparently that is very hard to get right.

So I thought… if you code the occupation according to a simple table (isn't there one?), you could store every written “functional impairment” text under the keys “occupation” and “diagnosis” in a shared database. The diagnosis is already coded according to ICD-10, so that is no problem. Once the database has collected some texts, you can start giving the doctor a list of texts for exactly that occupation and that disease and let him choose one. Each chosen text gets an uptick of +1, and the texts are ranked in the list by these scores. Call it a “like” if you want.

Every time FK rejects a certificate, the text it used gets a downtick of -5 points. In the long run, through Darwinian selection, the texts FK likes best will end up at the top of the list.
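The scoring scheme described above (+1 each time a doctor picks a text, -5 each time FK rejects a certificate that used it, ranking by total score) can be sketched in Python as follows. It is an in-memory illustration only, assuming the occupation is available as some code or label from a simple table (the `"carpenter"` value is a placeholder) and the diagnosis as an ICD-10 code; the class and method names are hypothetical, and a real shared database would need persistence and concurrent access that are not shown.

```python
from collections import defaultdict

PICK_POINTS = 1     # a doctor chose this text for a certificate
REJECT_POINTS = -5  # FK rejected a certificate that used this text


class ImpairmentTextDB:
    """In-memory sketch of a shared 'functional impairment' text database.
    Keys are (occupation, ICD-10 code); values map each text to its score."""

    def __init__(self) -> None:
        self._scores: dict[tuple[str, str], dict[str, int]] = defaultdict(dict)

    def add(self, occupation: str, diagnosis: str, text: str) -> None:
        # A newly written text enters the list with a neutral score.
        self._scores[(occupation, diagnosis)].setdefault(text, 0)

    def _adjust(self, occupation: str, diagnosis: str, text: str, points: int) -> None:
        bucket = self._scores[(occupation, diagnosis)]
        bucket[text] = bucket.get(text, 0) + points

    def picked(self, occupation: str, diagnosis: str, text: str) -> None:
        self._adjust(occupation, diagnosis, text, PICK_POINTS)

    def rejected_by_fk(self, occupation: str, diagnosis: str, text: str) -> None:
        self._adjust(occupation, diagnosis, text, REJECT_POINTS)

    def ranked(self, occupation: str, diagnosis: str) -> list[str]:
        # Highest score first; ties keep insertion order because sorted() is stable.
        bucket = self._scores.get((occupation, diagnosis), {})
        return sorted(bucket, key=bucket.__getitem__, reverse=True)


db = ImpairmentTextDB()
key = ("carpenter", "M54.5")  # placeholder occupation label, ICD-10 code
short = "Back pain limits work."
specific = "Cannot lift heavy timber or work bent over for more than a few minutes."
db.add(*key, short)
db.add(*key, specific)
db.picked(*key, short)
db.picked(*key, short)
db.picked(*key, specific)
db.rejected_by_fk(*key, short)  # FK sent that certificate back
print(db.ranked(*key))  # specific text first: score 1 vs 1 + 1 - 5 = -3
```

With these weights, one rejection costs as much as five picks, which is what pushes wording FK does not accept down the list over time.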

In the next step, we give FK online access to this “functional impairment database”, so we no longer need to choose the text ourselves; they can do it based on the diagnosis/occupation key pair. There, problem solved!

Socialstyrelsen (the National Board of Health and Welfare) has made a list of sick-leave recommendations that vaguely resembles this, but its flaw is that a committee made the list and that it does not adapt dynamically to usage. A list like the one I propose is built through “crowdsourcing” and will be much cheaper, more effective, and more complete than anything that can ever be produced centrally.

Once the list exists, you have a gold mine (gold mines are in fashion now!) of information for research: exactly which occupations are most affected by disease X or its consequences, and so on.

We will of course put this into iotaMed! The program already has sick leave driven by guidelines, so this will be fairly easy to add.

minIota

So now there is one more app in the iotaMed suite, namely “minIota” (or why not “miniIota”, as someone in the family said by mistake). minIota is the patient's app and runs on an iPad, but nothing prevents making a similar web-based app.

When the doctor using iotaMed activates a “patient form”, that form becomes available to the patient. The form can contain texts, such as instructions for diet or wound care, as well as data from the record, presented in a form more accessible to the patient. It can also contain fields that the patient fills in; these are shown automatically at the next visit and can then be imported into the record. The patient's data then becomes part of the record data, and can even become part of the quality registry data if it is configured that way.
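The patient-form round trip described above can be sketched in Python like this, assuming a form is a simple object holding instruction texts, a patient-friendly excerpt of record data, and fields for the patient to fill in. `PatientForm`, `import_answers`, and all field names are hypothetical, not minIota's actual format; the explicit import step mirrors the description that answers are shown at the next visit and can then be imported, with registry data updated only for fields configured that way.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PatientForm:
    """Hypothetical patient form activated by the doctor for one issue."""
    instructions: list[str]                    # e.g. diet or wound-care advice
    record_excerpt: dict[str, str]             # record data shown in patient-friendly wording
    patient_fields: dict[str, Optional[str]]   # filled in by the patient; None = not answered yet
    registry_fields: set[str] = field(default_factory=set)  # answers configured to feed a registry

    def fill(self, name: str, value: str) -> None:
        if name not in self.patient_fields:
            raise KeyError(f"form has no field {name!r}")
        self.patient_fields[name] = value

    def answered(self) -> dict[str, str]:
        # What the doctor is shown at the next visit.
        return {k: v for k, v in self.patient_fields.items() if v is not None}


def import_answers(form: PatientForm, record: dict[str, str],
                   registry: dict[str, str]) -> None:
    """Copy the patient's answers into the record; mirror configured fields to registry data."""
    for name, value in form.answered().items():
        record[name] = value
        if name in form.registry_fields:
            registry[name] = value


form = PatientForm(
    instructions=["(diet advice text defined in the template)"],
    record_excerpt={"Latest long-term blood sugar (HbA1c)": "52 mmol/mol"},
    patient_fields={"weight": None, "home glucose readings": None},
    registry_fields={"weight"},
)
form.fill("weight", "91")  # entered by the patient at home
record: dict[str, str] = {}
registry: dict[str, str] = {}
import_answers(form, record, registry)  # run after the doctor has reviewed the answers
print(record)    # {'weight': '91'}
print(registry)  # {'weight': '91'}
```

Unanswered fields stay `None` and are simply skipped on import, so a half-filled form never writes empty values into the record.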

Access to the patient's form, and the path back to iotaMed and thus to the classic record, can fairly easily be provided via “Mina vårdkontakter” or a similar service.

I have made a video presentation of this:

Vitalis

We gave our presentation on the third and final day of Vitalis. It is also on YouTube, of course.

The reception was very positive, and the audience asked exactly the questions I wanted them to ask; thank you for that. A funny incident that cannot be seen or heard on the video: in the first scenario I walk through a case of type 2 diabetes, doing the workup, then the annual checkup, and finally the quality registry reporting. I show how quality registry handling becomes almost trivial because a correctly structured “issue” takes care of it automatically, and I end the prerecorded video with the line “Wasn't that elegant?”, whereupon someone in one of the front rows spontaneously exclaimed “Yes, indeed!”.

Ankle trauma

Anders Westermark, orthopedic surgeon at UAS, shows in this video how to manage ankle trauma with iotaMed. Anders will give part of our presentation at Vitalis in three days (7 April, 11:45-12:30, auditorium A6).

iotaEd: our issue editor

We have yet another product in development: iotaEd, a desktop editor that lets GPs and specialists create their own issues. That is where care programs come from today, and that is where “issues” should come from too, since they are fundamentally care programs.

There is, of course, a video presentation of this as well.

Tolvan also gets diabetes and breaks his foot

Now I have two more demo videos of iotaMed®, with considerably more data and more operations. Spoiler: quality registries are a piece of cake. They are best watched in reverse order; I made them in the wrong order, so that way it comes out right.